"Never stop testing, and your advertising will never stop improving." —David Ogilvy

If you were going to send your first OkCupid message to a potential date, how many versions would you come up with? And how would you test which one worked best?

Fortunately, there is no market for testing online dating messages (not yet, anyway). But split-testing, also known as A/B-testing, has been big business for marketers since the middle of the 20th century. Ogilvy & Mather famously used A/B-testing to rise to the top of the marketing world, and today Neil Patel continues to preach the good news.

Multivariate testing, on the other hand, is a more recent and complex affair. Theoretically, there is no upper limit to the number of combinations that could be tested, making it an extremely powerful alternative to traditional split-testing.

Testing platforms, such as Optimizely, offer both types of tests.

But most businesses, especially SMBs launching their first site, are hesitant about testing. They're interested in it, but they don't know the differences between the two tests. Their common questions may include the following:

- How much does it cost?

- How long does it take?

- What do I have to do?

- Can I really trust the results?

Using examples, let's go over three ways A/B-testing and multivariate-testing differ. (Along the way, I'll answer each of those four questions as well.)

1. Amount of Traffic

Let's say you make goat-milk soap and you sell it for $5 a bar. Your site has, on a good day, 300 visitors. Your average is about 150 per day. That works out to 1,050 visitors in a typical week. Not bad.

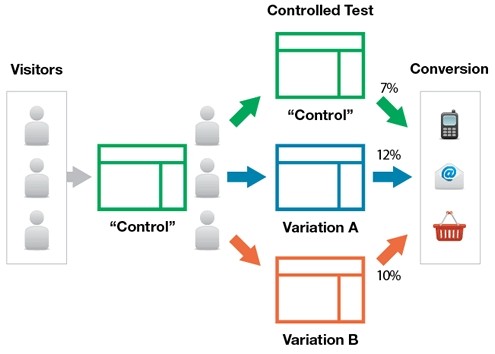

But you have aspirations of establishing a brick-and-mortar shop, and you want to improve conversions to get there. So you set up an A/B/C test (one in which three variations of a page are tested) for your homepage. In one variation, you have a picture of a bar of soap and a blue background; in another, a goat and a green background; and in the third, you and your family against a white background. The copy corresponds to the imagery.

The A/B/C test runs for a week, and your weekly visitors are split evenly across the three variations (see image, below). Instead of 1,050 visitors to one page, each page receives 350 visitors over the course of the week. Put another way, the "winning" page gets just one-third of your typical traffic while you're testing, so two-thirds of your visitors are sent to the "losing" pages.

Source: Shine InfoTech

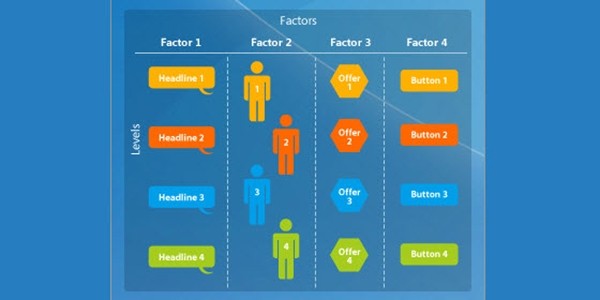

Now let's reimagine the scenario. Instead of three whole-page variations, you want to test four page elements in different arrangements to see how well they complement one another. Counting every possible ordering of four elements gives 4! = 24 variations, and 1,050/24 is only about 44 visitors per variation per week.
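To make the arithmetic concrete, here is a minimal Python sketch of how the same weekly traffic gets diluted as variations are added. It assumes traffic is split evenly across variations; the function name `visitors_per_variation` is made up for this illustration and does not come from any testing platform.

```python
from math import factorial

# 150 visitors per day on average, as in the soap-maker example
WEEKLY_VISITORS = 150 * 7  # 1,050 per week


def visitors_per_variation(weekly_visitors: int, variations: int) -> float:
    """Weekly visitors each variation sees, assuming an even split."""
    return weekly_visitors / variations


# Three whole-page designs in an A/B/C test
abc_test = visitors_per_variation(WEEKLY_VISITORS, 3)

# Four elements tested in every ordering: 4! = 24 variations
multivariate = visitors_per_variation(WEEKLY_VISITORS, factorial(4))

print(f"A/B/C test: {abc_test:.0f} visitors per variation per week")
print(f"24-variation test: {multivariate:.2f} visitors per variation per week")
```

Swapping in your own weekly traffic and variation count gives a quick feel for whether a planned test is realistic before you set it up.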

Takeaway: When you have low traffic, A/B-testing works much better, because multivariate tests need a lot of traffic to return reliable results.

2. Overall Design vs. Individual Elements

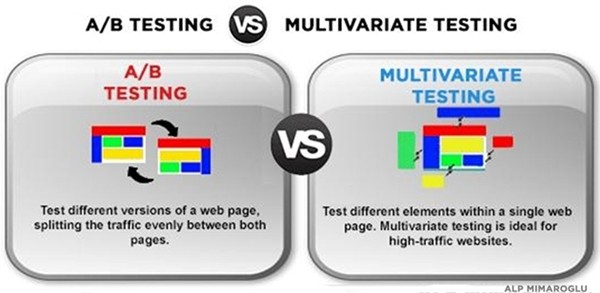

Source: Pro Blog Design

There is a common misconception that A/B tests have only two variations, or that any split test with more than two variations is a multivariate test. Not so. For example, an A/B/C/D test is still a type of A/B test and not a multivariate test.

That brings us to the purpose of A/B tests versus multivariate tests.

A/B tests are useful when competing page designs are up for debate. For example, in the soap-maker scenario, the owner used an A/B/C test to pick a winner among three variations, each a distinct page design.

A multivariate test, on the other hand, attempts to validate individual elements or combinations of elements rather than entire pages. The page design itself is templated, and individual page elements are simply swapped around (see image above). It is a much more complex and analytical method of testing, and it can conclusively rank specific page elements. In an ideal world.

In reality, multivariate-testing is hard to execute because it demands far more care than A/B-testing. Not only does it need substantially more page traffic just to run, it also needs more input from you. Nonsense combinations that would drive away business must be eliminated from the pool, and results should be interpreted by a professional analyst. Eyeballing the results can easily lead to false positives.
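To see why eyeballing is risky, here is a rough Python sketch of one common check an analyst might run: a two-sided, two-proportion z-test comparing the conversion rates of two variations. This is only one of several possible methods, not necessarily what any given testing platform uses, and the conversion counts below are invented for illustration (350 visitors per variation matches the A/B/C example; 14 and 21 sales are hypothetical).

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the assumption that both pages perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no conversions (or all conversions): nothing to distinguish
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed through the error function
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


# Hypothetical week: page A converts 14 of 350 (4%), page B converts 21 of 350 (6%)
p_value = two_proportion_p_value(14, 350, 21, 350)
print(f"p-value: {p_value:.3f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

With these made-up numbers, page B looks like a 50% improvement at a glance, yet the p-value comes out well above the usual 0.05 threshold, so the gap could easily be chance. With 24 variations there are many more comparisons to make, and the odds that at least one of them looks like a winner by accident go up accordingly.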

Takeaway: Multivariate-testing is harder to execute and analyze than A/B-testing. Because there are more variables at play, there is more risk involved.

3. Time Required

The third key difference is the time required to obtain viable results from each test.

Let's use our soap-maker again as an example. If she were to run an A/B test with roughly 1,000 weekly visitors, it would take her only two weeks to get a substantial, testable number of visitors to each variation of her page (1,000 each). If she ran an A/B/C/D test, it would take her four weeks to get 1,000 visitors to each variation.

But if she opted to go with a multivariate test for four elements of her homepage (again, 4! = 24 variations), it would take her 24 weeks, or roughly six months, to get 1,000 visitors to each variation. By then, the business she lost by directing visitors to losing pages could have put her out of business (only 1/24 of her visitors would have seen the "winning" page).
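The timelines above follow from simple division, sketched here in Python. The 1,000-visitors-per-variation target is this article's rule of thumb rather than a statistical requirement, traffic is assumed to be split evenly, and `weeks_needed` is a name invented for this example.

```python
from math import ceil


def weeks_needed(variations: int,
                 weekly_visitors: int = 1000,
                 target_per_variation: int = 1000) -> int:
    """Whole weeks until every variation has reached the target visitor count."""
    # Total visitors required across all variations, divided by weekly traffic
    return ceil(target_per_variation * variations / weekly_visitors)


for label, variations in [("A/B", 2), ("A/B/C/D", 4), ("Multivariate (4! = 24)", 24)]:
    print(f"{label}: {weeks_needed(variations)} weeks")
```

Changing `weekly_visitors` or `target_per_variation` shows how quickly the schedule stretches on a low-traffic site.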

Takeaway: In an ideal world, multivariate-testing would be better. But in the real world, A/B-testing will probably get you where you need to go—cheaper and faster.